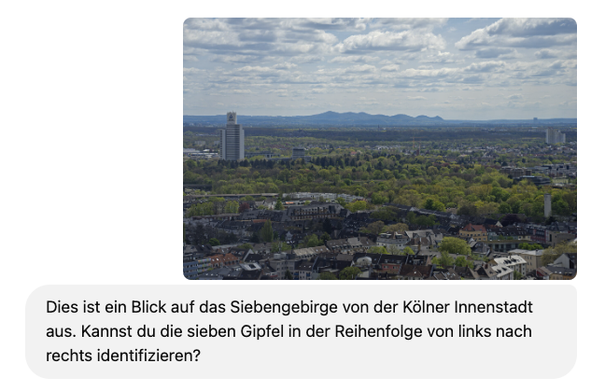

An analysis of how different models answer this might make a nice post. Spoiler: surprisingly good first answers, but it takes a lot of arguing to convince the #LLMs of the actual order. And #Reasoning via repeated generation loops still strikes me as a rather half-baked idea. Would anyone want to read that?

#reasoning

This groundbreaking revelation from the ivory towers of #academia ponders whether #RL can magically transform bland #LLMs into #reasoning superstars. Spoiler alert: after endless waffle, the answer is still "TBD." Apparently, all that’s needed is a touch of wizardry from #Tsinghua & Shanghai's finest.

https://limit-of-rlvr.github.io/ #Shanghai #HackerNews #ngated

Does RL Incentivize Reasoning in LLMs Beyond the Base Model?

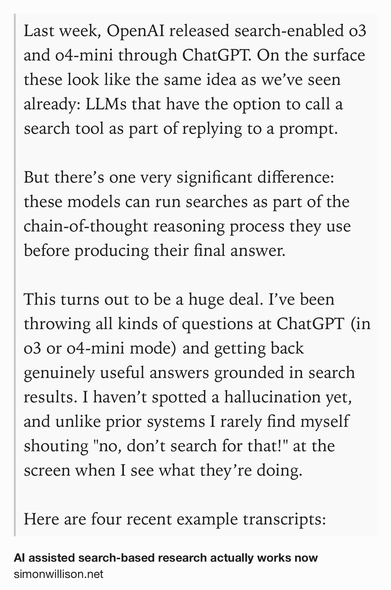

AI assisted search-based research actually works now https://bit.ly/4jleebn #AI #search #reasoning

AI's Secret Advantage - Dwarkesh Patel Podcast

#knowledge #reasoning #fyp #explore #discover

Enhancing AI trustworthiness through automated reasoning: A novel method for explaining deep learning and LLM reasoning. ~ Julia Connolly, Oliver Stanton, Sarah Veronica, Liam Whitmore. https://www.researchgate.net/publication/390844466_Enhancing_AI_Trustworthiness_Through_Automated_Reasoning_A_Novel_Method_for_Explaining_Deep_Learning_and_LLM_Reasoning #LLMs #Reasoning #ITP

"Dwarkesh Patel: I want to better understand how you think about that broader transformation. Before we do, the other really interesting part of your worldview is that you have longer timelines to get to AGI than most of the people in San Francisco who think about AI. When do you expect a drop-in remote worker replacement?

Ege Erdil: Maybe for me, that would be around 2045.

Dwarkesh Patel: Wow. Wait, and you?

Tamay Besiroglu: Again, I’m a little bit more bullish. I mean, it depends what you mean by “drop in remote worker” and whether it’s able to do literally everything that can be done remotely, or do most things.

Ege Erdil: I’m saying literally everything.

Tamay Besiroglu: For literally everything. Just shade Ege’s predictions by five years or by 20% or something.

Dwarkesh Patel: Why? Because we’ve seen so much progress over even the last few years. We’ve gone from ChatGPT two years ago to now we have models that can literally do reasoning, are better coders than me, and I studied software engineering in college. I mean, I did become a podcaster, I’m not saying I’m the best coder in the world.

But if you made this much progress in the last two years, why would it take another 30 to get to full automation of remote work?

Ege Erdil: So I think that a lot of people have this intuition that progress has been very fast. They look at the trend lines and just extrapolate; obviously, it’s going to happen in, I don’t know, 2027 or 2030 or whatever. They’re just very bullish. And obviously, that’s not a thing you can literally do.

There isn’t a trend you can literally extrapolate of “when do we get to full automation?”. Because if you look at the fraction of the economy that is actually automated by AI, it’s very small. So if you just extrapolate that trend, which is something, say, Robin Hanson likes to do, you’re going to say, “well, it’s going to take centuries” or something."

https://www.dwarkesh.com/p/ege-tamay

#AI #LLM #Reasoning #Chatbots #AGI #Automation #Productivity

New Reasoning AIs Hallucinate More

o3 & o4-mini outperform older models in coding & math, but hallucinate more.

On PersonQA, o3 hallucinated 33% of answers, o4-mini 48%.

No clear cause; scaling reasoning may amplify false claims.

Transluce: o3 fabricates actions, such as claiming to have executed code.

More compute for LLM reasoning isn't a magic bullet. MS Research finds gains vary by model/task, costs fluctuate, & longer answers aren't always better. Key takeaway: Efficiency & verification matter.

#AI #LLMs #Reasoning

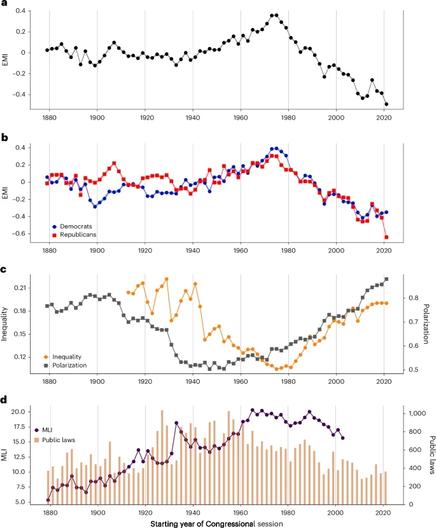

A study published by Nature shows what has led to Congress being so ineffective:

A nation of idiots.

Andreas Schleicher, the head of education and skills at the O.E.C.D., told The Financial Times, “Thirty percent of Americans read at a level that you would expect from a 10-year-old child.” He continued, “It is actually hard to imagine — that every third person you meet on the street has difficulties reading even simple things.”

#USpolitics #education #collapse #reasoning #enlightenment #USA

https://www.nytimes.com/2025/04/10/opinion/education-smart-thinking-reading-tariffs.html

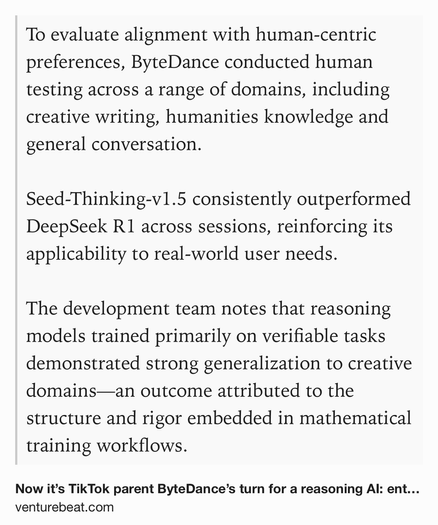

Now it’s TikTok parent ByteDance’s turn for a reasoning AI: enter Seed-Thinking-v1.5! https://venturebeat.com/ai/now-its-tiktok-parent-bytedances-turn-for-a-reasoning-ai-enter-seed-thinking-v1-5/ #AI #reasoning

From blind solvers to logical thinkers: Benchmarking LLMs' logical integrity on faulty mathematical problems. ~ A M Muntasir Rahman et al. https://arxiv.org/abs/2410.18921 #LLMs #Math #Reasoning

How University Students Use Claude

https://www.anthropic.com/news/anthropic-education-report-how-university-students-use-claude

https://news.ycombinator.com/item?id=43633383

Aside: been trialing SoTA LLMs

ChatGPT, Gemini, Claude ...

https://www.counterpunch.org/2025/04/07/the-ai-power-play-how-chatgpt-gemini-claude-and-others-are-shaping-the-future-of-artificial-intelligence/

* particularly impressed w. Claude (3.7 Sonnet), DeepSeek

* most SoTA free (ChatGPT higher performing paywalled): still amazing!

* chain-of-thought reasoning / augmented responses (web retrieval: RAG)

* very impressive!!

* Firefox users: try the AI Toolbox extension

“We’re stepping into the most pro-growth, pro-business, pro-American administration I’ve perhaps seen in my adult lifetime,” gushed the hedge fund manager Bill Ackman in December...

“You don’t get fired for being bullish, but you do get fired for being bearish on Wall Street,” said Berezin.

#finance #trade #tariffs #macroeconomics #Trumpism #collapse #psychology #groupthink #rationality #biases #logic #reasoning

https://www.nytimes.com/2025/04/07/opinion/trump-stock-market-wall-street.html

CEO #SamAltman conceded that #OpenAI had been on the wrong track with #OpenSource and announced a new strategy.

The upcoming model is to have solid #Reasoning capabilities and will be vetted against OpenAI's safety guidelines before release.

https://eicker.TV #Technik #Medien #Politik #Wirtschaft Ex (2/2)

»In collaboration with #TsinghuaUniversity, #DeepSeek developed a technique combining #reasoning methods to guide #AImodels towards human preferences.« https://www.scmp.com/tech/tech-trends/article/3305259/deepseek-unveils-new-ai-reasoning-method-anticipation-its-next-gen-model-rises?eicker.news #tech #media

"Mathematics is not arithmetic. Though mathematics may have arisen from the practices of counting and measuring it really deals with logical reasoning in which theorems [...] can be deduced from the starting assumptions. It is, perhaps, the purest and most rigorous of intellectual activities, and is often thought of as queen of the sciences." – Erik Christopher Zeeman (1925-2016)

#quote #mathematics #math #maths #reasoning

»Don’t believe #reasoning models' Chains of Thought, says #Anthropic: In a new paper, Anthropic researchers tested the “#faithfulness” of #CoTmodels’ reasoning.« https://venturebeat.com/ai/dont-believe-reasoning-models-chains-of-thought-says-anthropic/?eicker.news #tech #media

![Photographic portrait of Erik Christopher Zeeman, and a quote : "Mathematics is not arithmetic. Though mathematics may have arisen from the practices of counting and measuring it really deals with logical reasoning in which theorems [...] can be deduced from the starting assumptions. It is, perhaps, the purest and most rigorous of intellectual activities, and is often thought of as queen of the sciences."](https://files.ohai.social/cache/media_attachments/files/114/291/866/020/538/066/small/a6d4e1a12856dedc.png)