Running Nvidia and Ollama on NixOS WSL: keep an LLM up 24/7 on your gaming PC

➤ Easily run large language models on your gaming PC

✤ https://yomaq.github.io/posts/nvidia-on-nixos-wsl-ollama-up-24-7-on-your-gaming-pc/

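This post details how the author configured an Nvidia GPU and Ollama on a gaming PC under NixOS on WSL so that LLMs stay available around the clock. The author works around VRAM locking, WSL shutting itself down, and NixOS's initially lacking Nvidia support, and shares detailed configuration steps: settings that keep WSL running, installing and configuring NixOS, setting up the Nvidia Container Toolkit, and configuring the Ollama Docker container, with Tailscale integrated to simplify networking.

+ This post is really useful. I've been wanting to try a local LLM, but setting one up was too much hassle. This approach looks more doable!

+ NixOS looks powerful, but the learning curve is a bit steep. Still, to be able to run an LLM locally, I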

#NixOS #WSL #Nvidia #Ollama #LLM #Docker

#ollama

Nvidia on NixOS WSL – Ollama up 24/7 on your gaming PC

https://yomaq.github.io/posts/nvidia-on-nixos-wsl-ollama-up-24-7-on-your-gaming-pc/

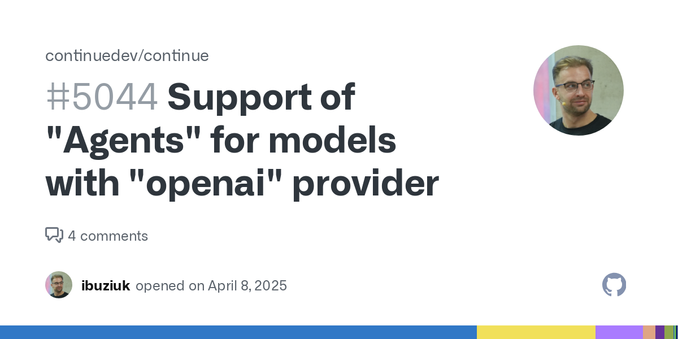

You can now use Ollama models with Continue.Dev to do “agentic” code generation in VSCode. However, if you use LiteLLM to manage your model access, you won’t be able to take advantage of this feature just yet: https://github.com/continuedev/continue/issues/5044

#selfhosted #ai #ollama (https://brainsteam.co.uk/notes/2025/04/10/1744283027/)

FINALLY: Folders in self-hosted n8n!

After years of working with n8n, I'm showing you how to organize your #Ollama & AI workflows with this game-changing feature.

What happens when Claude, Gemini & #Ollama answer at the same time? Jean-Claude Brantschen shows how to drive all your #LLMs from #OpenWebUI: no switching tools, with parallel prompts to every model.

Read it and build it yourself: https://javapro.io/de/remote-llms/

#DevTools #Cloud #API #DevOps

Good or bad? My laptop with a 12th gen i7-12800H and an #nvidia A1000 GPU can sustain a 3.8 GHz turbo on all cores, almost 1 GHz (sustained) over the 2.9 GHz promise from #intel. I am using Ollama and Gemma3:27b to beat on it. The GPU is a bit of a lap potato of course and hovers around 65C, while the CPU rides 96C.

Tokens per second is about 25.
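For anyone wanting to reproduce a tokens-per-second figure like this, here is a minimal Python sketch. It assumes the number is derived from the `eval_count` and `eval_duration` (nanoseconds) fields that Ollama's `/api/generate` endpoint returns; the post does not say how its figure was measured, and the `sample` values below are made up for illustration, not taken from the laptop above.

```python
def tokens_per_second(response: dict) -> float:
    """Compute generation speed from an Ollama /api/generate response.

    eval_count is the number of generated tokens and eval_duration is
    the time spent generating them, in nanoseconds.
    """
    eval_count = response["eval_count"]
    eval_duration_ns = response["eval_duration"]
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    # Convert nanoseconds to seconds before dividing.
    return eval_count / (eval_duration_ns / 1e9)


# Hypothetical response fragment: 250 tokens generated in 10 seconds.
sample = {"eval_count": 250, "eval_duration": 10_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")  # prints "25.0 tokens/s"
```

Running `ollama run <model> --verbose` in a terminal reports a comparable eval rate without any code.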

I did some #vibehosting yesterday... I couldn't be bothered investigating why #fail2ban keeps banning my IP after fetching emails from my email server, so I decided to delegate my issues to #ollama.

I set up a knowledge base with all the necessary config and log files, etc., and asked #QwQ to investigate... Since it's a #localLLM, I had no issues submitting even the most sensitive information to it.

QwQ did come up with tailored suggestions on how to fix the problem. #openwebui
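The post used an Open WebUI knowledge base. As a rough sketch of the same idea without Open WebUI, the Python below pastes config and log excerpts into one prompt and sends it to a local Ollama server. It is a swapped-in technique, not the poster's setup: the helper names, the file name in the usage note, and the `qwq` model tag are assumptions, and the URL assumes Ollama's default port 11434.

```python
import json
import urllib.request

# Assumption: Ollama is listening locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_prompt(question: str, documents: dict[str, str]) -> str:
    """Join named file excerpts into one prompt, followed by the question."""
    parts = [f"### {name}\n{text.strip()}" for name, text in documents.items()]
    context = "\n\n".join(parts)
    return f"{context}\n\nQuestion: {question}"


def build_generate_request(model: str, prompt: str) -> bytes:
    """Encode the JSON body for a non-streaming /api/generate call."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def ask_ollama(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=build_generate_request(model, prompt),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read())["response"]
```

A call might look like `ask_ollama("qwq", build_prompt("Why does fail2ban keep banning my IP?", {"jail.local": open("jail.local").read()}))`. Everything stays on the local machine, which is the point the post makes about sensitive files.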

Ok this is real fun with #ollama and #homeassistant.

But I just upgraded my #homelab and now I still need a Tesla P40 to boost this fun.

Some local #LLMs tested on an average #gamingPC: https://github.com/donatas-xyz/AI/discussions/1

Hello clever Fediverse, I'm diving into a completely absurd #Rabbithole right now, and thanks to #LlmStudio and #Ollama my M1 MacBook has rediscovered its fan… At the moment, local #LLM use tops out at 8-12B (32 GB RAM). Are there #Benchmarks somewhere that will please talk me out of thinking this gets drastically better with an M4 and >48 GB RAM? Or would something completely different be smarter? Or a different hobby? It has to be mobile (reachable), because my lifestyle is too unsettled for a desktop. Recommendations welcome in the comments.

New post on Firebleu Website!

Today I'm exploring self-hosting a powerful AI with Deepseek, an impressive model to try at home!

Want to keep control of your data? This guide is for you

Link: https://firebleu.website/deepseek-et-lauto-hebergement-la-solution-miracle/

Introducing MoonPiLlama

Adam Jenkins has made a YouTube video showing how to install #MoodleBox and Ollama on a Raspberry Pi 4. MoodleBox is a custom distribution of #Moodle specifically for the Raspberry Pi. The Moodle part also includes good general information on how to get Ollama and Moodle to work together, plus gratuitous use of a yellow rubber duck.

(Yes, Pi 4, not Pi 5.)

@nothingfuture I haven't dug too deep into it (yet), but you can find more information about the training data used for the models they list in the Google Doc.

For instance, https://huggingface.co/microsoft/Phi-3-medium-128k-instruct was "trained with the Phi-3 datasets that includes both synthetic data and the filtered publicly available websites data with a focus on high-quality and reasoning dense properties."

#nercomp25 #AI #ollama

#Ollama #Models #LLM #Hosting #remembering #Smarter I found a way to make my Ollama environment with multiple LLMs a lot smarter. And even better... Ollama no longer forgets anything! Article: lkjp.me/78m