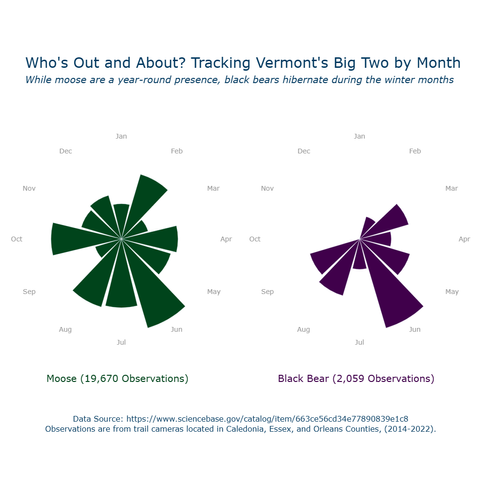

Diving into #Vermont wildlife for the #30DayChartChallenge "circle" day! Using #Python & #plotly to compare monthly #Moose and #BlackBear sightings

Data wrangled with #BigQuery and #SQL. Any guesses which animal is seen more consistently throughout the year?

#DataViz #Wildlife #RadialChart
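For anyone who wants to try the "circle" idea, here is a minimal sketch of how such a comparison could be set up. The monthly counts are made-up placeholders, not the Vermont data, and the helper names are hypothetical. The traces are plain dicts in plotly's figure-dict layout (`scatterpolar`), so the block runs without plotly installed; the coefficient of variation is just one possible way to put a number on "consistency".

```python
import statistics

MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]


def radial_trace(name, monthly_counts):
    """Build a scatterpolar trace dict, repeating January so the outline closes."""
    if len(monthly_counts) != 12:
        raise ValueError("expected one count per month")
    counts = list(monthly_counts)
    return {
        "type": "scatterpolar",
        "name": name,
        "r": counts + counts[:1],
        "theta": MONTHS + MONTHS[:1],
        "fill": "toself",
    }


def coefficient_of_variation(monthly_counts):
    """Spread relative to the mean: lower means sightings are steadier across the year."""
    return statistics.pstdev(monthly_counts) / statistics.mean(monthly_counts)


# Hypothetical sighting counts, for illustration only (NOT the real dataset).
moose = [4, 3, 5, 6, 9, 10, 8, 7, 9, 11, 6, 5]
black_bear = [0, 0, 1, 5, 12, 15, 14, 13, 10, 6, 2, 0]

figure = {
    "data": [radial_trace("Moose", moose), radial_trace("Black bear", black_bear)],
    "layout": {"title": {"text": "Monthly sightings (placeholder data)"}},
}

for name, counts in [("Moose", moose), ("Black bear", black_bear)]:
    print(f"{name}: CV = {coefficient_of_variation(counts):.2f}")
```

If plotly is available, the `figure` dict should be renderable by passing it to `plotly.io.show`.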

#bigquery

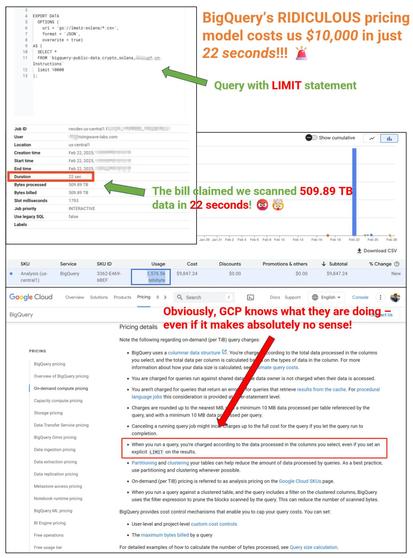

BigQuery pricing model cost us $10k in 22 seconds

#HackerNews #BigQuery #Cost #$10k #DataAnalysis #CloudComputing #PricingModel #TechNews

"Map of Python" is the digital dabbling of a cartographer, lost in the vast jungle of 500,000+ #Python packages, desperately trying to create art from JSON blobs.

But hey, at least there's #BigQuery to save the day from the horror of actually downloading data!

https://fi-le.net/pypi/ #DataVisualization #Cartography #ArtInTech #HackerNews #ngated

No more manually cross-referencing dbt docs from dev and prod

No more manually checking schemas in your data warehouse

No more manually comparing row-counts on models.

See a trend?

Read how an experienced data professional validates zero regression on a #dbt PR:

Exploring dbt for Data Transformation

The journey continues! In this part of the project, I'm learning how dbt models help automate data transformation. I'm building out models in dbt for these taxi datasets to create clean, analysis-ready data in #BigQuery. It’s fascinating to see how everything connects! #DataEngineering #dbt #GCP #ETL #DataTalksClub

Started Module 4 of #DataEngineering Zoomcamp!

Just kicked off the Analytics Engineering module and I'm diving into transforming the Green Taxi, Yellow Taxi, and FHV NY Taxi datasets loaded in #BigQuery. Excited to see how dbt can help create analytical views for better decision-making! #dbt #DataTalksClub #GCP #AnalyticsEngineering #ETL

> We are adding additional services to your project(s) to create a unified platform for AI-powered data analytics.

[BigQuery] Search for similar images from an image! A simple explanation of multimodal embeddings

https://qiita.com/te_yama/items/d320f81ddffae447b495?utm_campaign=popular_items&utm_medium=feed&utm_source=popular_items

This week! Join us on 19 February for our #CommunityCall! This month, we'll present the different avenues for accessing the #OpenAIREGraph's data (such as #API, #Zenodo, #GoogleCloud, etc.) with a brief recap of our #BigQuery training from October. Registration and agenda in the link below.

Register now https://tinyurl.com/y27vxyrp

DataTalksClub's Data Engineering Zoomcamp Week 3 - BigQuery as a data warehousing solution.

For this week's module, we used Google's BigQuery to read Parquet files from a GCS bucket and compared query behaviour on regular, external, and partitioned/clustered tables.

My answers to this module: https://github.com/goosethedev/de-zoomcamp-2025/blob/ecb1f1f3fc69b8d10703eb07328567dab2acf688/03-data-warehousing/README.md
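A rough sketch of the two kinds of table definition this module compares, written as small Python helpers that emit BigQuery DDL. Every project, dataset, bucket, and column name here is a hypothetical placeholder, not taken from the linked repo, and `PARTITION BY DATE(...)` assumes the partition column is a TIMESTAMP or DATETIME.

```python
def external_table_ddl(table, parquet_uris):
    """DDL for an external table that reads Parquet files in place from GCS."""
    uri_list = ", ".join(f"'{uri}'" for uri in parquet_uris)
    return (
        f"CREATE OR REPLACE EXTERNAL TABLE `{table}`\n"
        f"OPTIONS (format = 'PARQUET', uris = [{uri_list}]);"
    )


def partitioned_table_ddl(target, source, partition_column, cluster_columns):
    """DDL that materialises `source` as a partitioned and clustered native table."""
    clustering = ", ".join(cluster_columns)
    return (
        f"CREATE OR REPLACE TABLE `{target}`\n"
        f"PARTITION BY DATE({partition_column})\n"
        f"CLUSTER BY {clustering} AS\n"
        f"SELECT * FROM `{source}`;"
    )


# Hypothetical names, for illustration only.
external = external_table_ddl(
    "my-project.taxi.yellow_external",
    ["gs://my-bucket/yellow/*.parquet"],
)
partitioned = partitioned_table_ddl(
    "my-project.taxi.yellow_partitioned",
    "my-project.taxi.yellow_external",
    "pickup_datetime",
    ["vendor_id"],
)

print(external)
print(partitioned)
```

Running the same filtered query against each table and looking at the estimated bytes processed is one way to see the difference between them.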

FFS. Turns out (after I built a feature) that you can't supply a schema for BigQuery Materialised Views.

> Error: googleapi: Error 400: Schema field shouldn't be used as input with a materialized view, invalid

So it's impossible to have column descriptions for MVs? That sucks.

Whilst migrating our log pipeline to use the BigQuery Storage API & thus end-to-end streaming of data from Storage (GCS) via Eventarc & Cloud Run (read, transform, enrich - NodeJS) to BigQuery, I tested some big files, many times the largest we've ever seen in the wild.

It runs at just over 3 log lines/rows per millisecond end-to-end (i.e. inc. writing to BigQuery) over 3.2M log lines.

Would be interested to know how that compares with similar systems.
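For comparison purposes, the figures above convert to more familiar units with a little arithmetic. This is only a back-of-the-envelope sketch that uses the rounded 3 rows/ms rate, so the real run ("just over 3") would have finished slightly faster; the function names are made up for the example.

```python
def rows_per_second(rows_per_ms):
    """Convert a per-millisecond rate to a per-second rate."""
    return rows_per_ms * 1000


def elapsed_seconds(total_rows, rows_per_ms):
    """Wall-clock time needed to process total_rows at a steady rate."""
    return total_rows / rows_per_ms / 1000


rate = 3.0            # rows per millisecond, rounded down from "just over 3"
total = 3_200_000     # log lines in the test

seconds = elapsed_seconds(total, rate)
print(f"{rows_per_second(rate):,.0f} rows/s")                       # 3,000 rows/s
print(f"about {seconds:,.0f} s, or {seconds / 60:.1f} minutes")     # about 1,067 s, or 17.8 minutes
```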

Google Analytics updates BigQuery Export with enhanced traffic source analysis: Learn about the latest Google Analytics BigQuery export enhancement adding session-scoped traffic source fields for deeper insights. https://ppc.land/google-analytics-updates-bigquery-export-with-enhanced-traffic-source-analysis/?utm_source=dlvr.it&utm_medium=mastodon #GoogleAnalytics #BigQuery #DataAnalytics #TrafficSource #DigitalMarketing

Google did finally post an outage status report

After several iterations, I think I've finally got my log ingest pipeline working properly, at scale, using the #BigQuery Storage API.

Some complications of migrating from the "legacy" "streaming" API (it isn't streaming in the code sense) have been really hard to deal with, e.g.:

* A single failing row in a write means the entire write fails

* SQL column defaults don't apply unless you specifically configure them to

* 10MB/write limit

I rewrote the whole thing today & finally things are looking good!
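One way to live with the per-write size limit is to group rows into batches that stay under a byte budget before sending them. The sketch below is not the pipeline described above (which is NodeJS) and makes no BigQuery calls. It measures rows by their JSON-encoded size as a stand-in, whereas the real API counts the serialized request, so a production version would want some headroom below the limit.

```python
import json

# 10MB per write, as mentioned in the post; treating it as 10 * 1024 * 1024 bytes is an assumption.
MAX_WRITE_BYTES = 10 * 1024 * 1024


def batch_rows(rows, max_bytes=MAX_WRITE_BYTES):
    """Yield lists of encoded rows whose combined size stays within max_bytes."""
    batch, size = [], 0
    for row in rows:
        encoded = json.dumps(row).encode("utf-8")
        if len(encoded) > max_bytes:
            raise ValueError("a single row exceeds the per-write limit")
        # Flush the current batch before it would overflow the budget.
        if batch and size + len(encoded) > max_bytes:
            yield batch
            batch, size = [], 0
        batch.append(encoded)
        size += len(encoded)
    if batch:
        yield batch


# Tiny demonstration with an artificially small 20-byte budget.
demo_rows = [{"n": i} for i in range(5)]
for batch in batch_rows(demo_rows, max_bytes=20):
    print(len(batch), "rows,", sum(len(r) for r in batch), "bytes")
```

Because one bad row fails the whole write, validating each row before it goes into a batch (or retrying a failed batch in smaller pieces) would be a natural extension.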

Qiita - Popular Articles