How the GAI Assessment Debate Has Led Us in the Wrong Direction

The common thread running through these debates is a fixation on outputs. GAI captured attention because of the ease with which eerily human outputs could be produced in response to natural language prompts. They may have been profoundly mediocre, at least initially. But it was still a remarkable discovery liable to unsettle the self-conception of those who work with text for a living. In fact it’s hard not to suspect this often underpinned the determination exhibited by many to explain away these automated texts as mediocre. The concern about academic integrity, what I describe in Carrigan (2024: ch 1) as the great assessment panic, rested on a similar assumption. It was now possible for students to substitute a human generated response to an assessment task with a machine generated response, creating an urgent need to find a way to distinguish between them.

There has thankfully been a widespread recognition that detection tools are unable to authoritatively identify machine generated text. They might detect patterns in writing which could be statistically associated with the use of GAI systems, but these patterns might also be associated with the style of those who are writing in a second or third language. Their demonstrated propensity to trigger false positives needs to be taken extremely seriously, because accusing a student of cheating when they have done no such thing must surely be weighed up against letting through the net students who have relied on GAI to produce their work.

The fact that one of the major detection tools has pivoted towards a water-marking model, effectively asking students to self-surveil the writing process in order to demonstrate their contribution to it, stands as a tacit admission of these limitations. There are certainly flags featuring in texts which should lead to a request for an explanation from a student, ranging from leaving what appears to be part of the prompt in the text or functional parts of the conversational agent’s response through to departures from assigned reading, odd stylistic fluctuations or hallucinated references. But the idea there is some conclusive means by which we could determine machine generated text, as opposed to a balance of evidence informing the decision to ask the student about their authorship, has rapidly unravelled in ways that can still feel troubling even to those who struggled to see how such an outcome could be possible from the outset.

It is slightly too easy to respond to this scenario by suggesting that assessment practice has to change and that perhaps the essay questions most vulnerable to machine generated responses always had their limitations. As I’ve argued previously, we’re now being forced to face limitations that were exposed by an essay mill industry which itself was facilitated by an earlier wave of digitalisation, in the sense that the scale it reached depended on platforms which could bring together buyers and sellers from across the globe (Carrigan 2024: ch 1).

But the idea that problematic use of GAI can be avoided entirely through shifting to more authentic and processual forms of assessment, involving ‘real world’ tasks in which students make things with assessment potentially informed by contextual knowledge of their progress through a module, should be treated carefully. Not least because there are probably only so many podcasts or posters you should ask a student to do each year. But it goes deeper than a lack of creativity about what these new assessments should entail and the corresponding problem of student boredom.

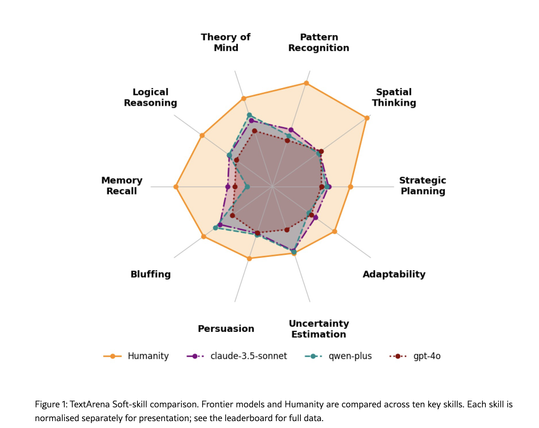

Kay, Husbands and Tangen (2024) suggested plausibly that the rapidly emerging “cottage industry … promising to translate traditional assessments into supposedly generative-AI proof formats … greatly underestimate the power of current LLMs”. Not only current LLMs but the ones which will be released in the near future. Since they published this article in early 2024, OpenAI released GPT-4o, Anthropic released Claude 3 Opus which was rapidly superseded by Claude 3.5 Sonnet and Alphabet released further iterations of their Gemini model. I’m writing these words in July 2024. By the time I finish this book, let alone by the time you read it, there will be further models with expanded capacities. This is unlikely to be an indefinite technological expansion of the kind claimed by evangelists who believe that ever increasing model sizes will eventually lead us to the fabled artificial general intelligence (AGI). But the expansion of practical capabilities is likely to continue, as these systems are refined as software, even if the technological development of LLMs eventually stalls.

The problem is that, as Mollick (2024) has pointed out repeatedly, a working knowledge of these capabilities is limited to those who are heavily engaged with them in an applied context. I’ve been using Anthropic’s Claude on a daily basis for well over a year at the time of writing, with a specific commitment to exploring how it can be used as part of my work and identifying new uses which might be valuable to other academics. Inevitably I’m more sensitive to the changes in the successive models than someone who occasionally uses it for specific purposes or who has experimented for a period of time then set it down. In fact I’m slightly embarrassed to admit what an exciting event it is for me at this stage when Anthropic release a new Claude.

There is a rapidly developing knowledge gap between those who are using LLMs in a sustained hands-on way and those casual users or non-users who are simply following at a distance. This means that decision making in higher education risks lagging behind the current state of practice, including our sense of what LLMs could and could not be used for by students. It would be a mistake to imagine our students are ‘digital natives’ who are intrinsically attuned to these developments in a way that leaves them much better able to exploit the new capabilities which come with each successive model (Carrigan 2021). But there clearly are students who are doing this and there are student user communities, found in places like Reddit, where knowledge of this is being shared.

It is difficult to estimate how wide such a student group is and my experience has been that most students, even on the educational technology master’s programme I teach, restrict themselves to using ChatGPT 3.5. Even so this knowledge gap means that we should treat our assumptions about what LLMs can do, as well as what at least some of our students can do with them, with a lot of caution. It probably isn’t possible to design an assessment which a student couldn’t in principle complete with the support of an LLM. It might be that most students couldn’t or wouldn’t do this. But the idea that we can definitely exclude LLMs from assessment through design ingenuity is likely to distract us from the real challenge here.

Instead of thinking in terms of outputs we need to think about process. This will help us move away from an unhelpful dichotomy in which entirely human-generated outputs are counterpoised to entirely machine-generated outputs. Rather than conceiving of the technology as somehow polluting the work of students, we could instead consider how the process through which they have crafted the work leads to certain kinds of outcomes which embody certain kinds of learning. This leaves us in a murky grey zone in which hybrid work, combining human and technological influences, should be taken as our starting point. Authentic forms of assessment are valuable because they provide opportunities for students to demonstrate their thinking process, not just their final product: how they approach problems, make decisions, and integrate various sources of information including, potentially, AI tools.

One of the first things I did when tentatively exploring ChatGPT 3.5 was present it with a title from a recent essay I had marked, in which a student critically reflected on their experience of using Microsoft Teams with a view to understanding the opportunities and limitations of the platform in an educational setting. It immediately produced a piece of writing which was blandly competent, self-reflecting in a way which was coherent but likely to receive a low B at most. I remember sending a message to a friend confidently stating that the work it produced was mediocre. I’m struck in retrospect at how loaded this confidence was, as if immediately feeling I could place it in a marking scheme gave me agency over what was portended by this development.

It’s a reaction I could see through the immediate discourse within the sector, as a frenetic anxiety which imagined the teaching and learning bureaucracy being near immediately washed away was combined with a confident dismissal of the mediocrity of the writing which was produced. As Riskin (2023) put it in a reflection on what she believed to be AI-student generated essays, these words often read like the “literary equivalent of fluorescent lighting”.

To confidently dismiss these outputs as mediocre tended to gloss over the troubling fact of how predominant mediocre writing is from our students, as well as in wider society. The bland competence which tended to characterise these early experiments with ChatGPT, the rhythm and resonance which betrayed a lack of human engagement with the task, was perhaps uncomfortably familiar to many academics. It’s the style of writing which results when the primary motivation of the writer is meeting requirements they have been set, jumping through hoops in order to achieve a specific goal. It’s the writing which ensues when extrinsic motivations predominate over intrinsic motivations, accomplishing something in the world by following a procedure rather than a creative process in which something is expressed.

It would be naive to imagine students might exclusively be motivated by the latter, in a context where studying involves taking on an enormous amount of debt and a potentially bleak social and occupational future awaits them at the end of the line. But the poetics of instrumentality are troubling, amongst other reasons, because they confront us with our own role within that system. They remind us of students not enjoying their writing, much as we ourselves often do not enjoy it. The fluorescent lighting of mechanically written work, intended to serve a purpose rather than exhibit meaning, is not confined to our students.

What concerns me is how many academics seem to have left matters here. Either experimenting with ChatGPT 3.5 themselves, or seeing the experiments of fellow academics, the matter seemed settled. The problem with such a view is not only the radical expansion in capabilities with successive waves of models. This is often expressed in terms of the parameters involved: escalating billions, through to trillions with Claude 3, which might have rhetorical effects if we could grasp what either the billions or the parameters mean concretely. This quantification offers little insight for most potential users about how the models are advancing.

In reality it is difficult to understand the expanding horizons of what they can do unless you are immersed in their daily use within a specific context. This is still such an unusual position to be in that it can be difficult to convey this understanding to colleagues who are undertaking their work without the benefit of a GAI assistant. In fact it might be prudent to keep this strange collaboration to yourself because there remains a lack of consensus in any profession as to what constitutes appropriate or inappropriate use of these technologies. Furthermore, there is such a vast number of regulatory issues related to their use within workplaces that many employers would prefer to ensure they are not used, even if there is little way for them to enforce this decision when employees routinely work from home and use their own devices.