CrowdStrike have published a faulty update that causes Windows to blue screen. The driver is C-00000291*.sys. It will cause worldwide outages. Thread follows, I suspect.

I'm obtaining a copy of the driver to see whether it's malicious or just bad coding. If anybody else is checking, let me know.

If anybody is wondering about the impact of the CrowdStrike thing: it’s really bad. Machines don’t boot.

The recovery is to boot into safe mode, log in as local admin and delete the offending file, which isn’t automatable. Basically, CrowdStrike will be in very hot water.
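The delete step itself is trivial once you're sitting in safe mode as a local admin; it's everything before that which can't be automated. A minimal sketch of just that step, in Python purely for illustration (on a real box you'd do it by hand or from a command prompt). It assumes the files live in `C:\Windows\System32\drivers\CrowdStrike`, the folder named in CrowdStrike's published workaround, and it defaults to a dry run. The function name is hypothetical.

```python
from pathlib import Path

# Assumption: the folder named in CrowdStrike's published workaround.
DEFAULT_DIR = Path(r"C:\Windows\System32\drivers\CrowdStrike")
# Glob for the bad channel files, as named in this thread.
PATTERN = "C-00000291*.sys"


def remove_bad_channel_files(directory: Path = DEFAULT_DIR, dry_run: bool = True) -> list[Path]:
    """Find (and optionally delete) files matching PATTERN in directory.

    Only useful once you're already logged in to safe mode as local admin;
    it can't help a machine that blue screens before Windows starts.
    """
    matches = sorted(directory.glob(PATTERN))
    for path in matches:
        if dry_run:
            # Report only, touch nothing.
            print(f"would delete {path}")
        else:
            path.unlink()
            print(f"deleted {path}")
    return matches


if __name__ == "__main__":
    # Dry run by default: list what would be removed.
    remove_bad_channel_files()
```

Defaulting to a dry run is deliberate: on a box you're recovering by hand, you want to see exactly which files match before anything is removed.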

A favour to ask of IT folks doing fixes: could you please copy the C-00000291*.sys file somewhere, upload it to VirusTotal, and reply with the VirusTotal link or file hash? It's still unclear whether the update was malicious or just a bug.
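If you can't upload the file itself, a SHA-256 is enough, since VirusTotal can look files up by hash. A minimal sketch for hashing copied files, assuming you've already copied them into some folder you pass on the command line; the function names are hypothetical.

```python
import hashlib
import sys
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 16) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hash_channel_files(directory: Path, pattern: str = "C-00000291*.sys") -> dict[str, str]:
    """Map each matching file name to its SHA-256, ready to paste into a reply."""
    return {p.name: sha256_of(p) for p in sorted(directory.glob(pattern))}


if __name__ == "__main__":
    # Usage: python hash_channel_files.py <folder containing the copied files>
    target = Path(sys.argv[1]) if len(sys.argv) > 1 else Path(".")
    for name, digest in hash_channel_files(target).items():
        print(f"{digest}  {name}")
```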

I've obtained copies of the .sys driver files CrowdStrike customers have. They're garbage. Each customer appears to have a different one.

They trigger an issue that causes Windows to blue screen.

I'm unsure how these got pushed to customers. I think CrowdStrike might have a problem.

For any orgs in recovery mode, I'd suspend CrowdStrike (CS) auto-updates for now.

If anybody is wondering, the update was delivered via CrowdStrike's channel file updates.

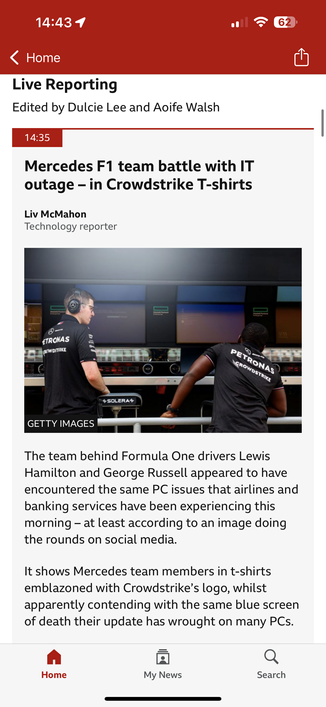

BBC tracker (they mix in an earlier Microsoft outage; what they're actually tracking is the CrowdStrike issue): https://www.bbc.co.uk/news/live/cnk4jdwp49et

The .sys files causing the issue are channel update files; they cause the top-level CS driver to crash because they're invalidly formatted. It's unclear how or why CrowdStrike delivered these files, and I'd pause all CrowdStrike updates temporarily until they can explain.
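The failure here is a driver that crashes on malformed content instead of rejecting it. The real channel file format isn't described in this thread, so the sketch below invents a hypothetical layout (magic bytes, a record count, fixed-size records) purely to show the opposite behaviour: validate every length before reading, and fall back to the last good content on failure. It's Python for readability; the real driver runs in the Windows kernel, where an out-of-bounds read means a blue screen rather than an exception. All names and the format are hypothetical.

```python
import struct
from dataclasses import dataclass

# Hypothetical layout, for illustration only (NOT CrowdStrike's real format):
#   4-byte magic b"CHNL", 4-byte little-endian record count,
#   then `count` records of 8 bytes each (two little-endian uint32 fields).
MAGIC = b"CHNL"
HEADER = struct.Struct("<4sI")
RECORD = struct.Struct("<II")


class InvalidChannelFile(ValueError):
    """Raised instead of crashing when content fails validation."""


@dataclass
class Record:
    rule_id: int
    flags: int


def parse_channel_file(data: bytes) -> list[Record]:
    # Make sure a full header is present before unpacking it.
    if len(data) < HEADER.size:
        raise InvalidChannelFile("truncated header")
    magic, count = HEADER.unpack_from(data, 0)
    if magic != MAGIC:
        raise InvalidChannelFile(f"bad magic {magic!r}")
    # Check the declared record count against the bytes actually present,
    # so we never read past the end of the buffer.
    expected = HEADER.size + count * RECORD.size
    if len(data) != expected:
        raise InvalidChannelFile(f"expected {expected} bytes, got {len(data)}")
    return [
        Record(*RECORD.unpack_from(data, HEADER.size + i * RECORD.size))
        for i in range(count)
    ]


def load_or_keep_previous(data: bytes, previous: list[Record]) -> list[Record]:
    """Reject a bad update and keep running on the last good content."""
    try:
        return parse_channel_file(data)
    except InvalidChannelFile:
        return previous
```

A file of garbage (say, all zeros) fails the magic check and the previous content stays in force, which is roughly the behaviour you'd want from the engine.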

Just a spoiler: this is going to turn out to be the biggest 'cyber' incident ever in terms of impact, as recovery is so difficult.

CrowdStrike's shares are down 20% in pre-market.

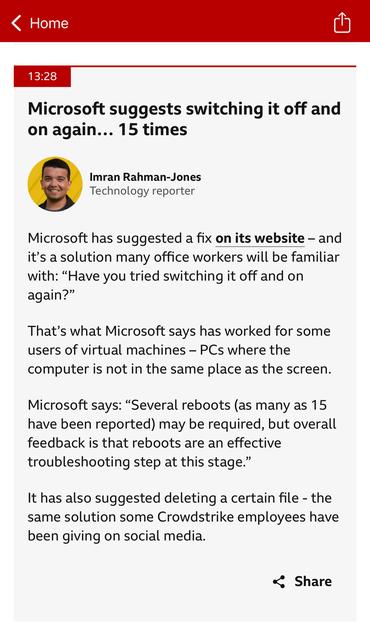

I'm seeing people post scripts for automated recovery. Scripts don't work if the machine won't boot (it hits an instant BSOD): you still need to manually boot the system into safe mode and get through BitLocker recovery (which needs a per-system key) before you can execute anything.

CrowdStrike are huge; at a global scale, that's going to take... some time.

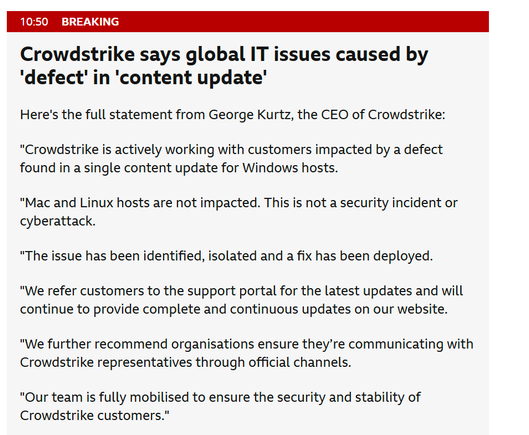

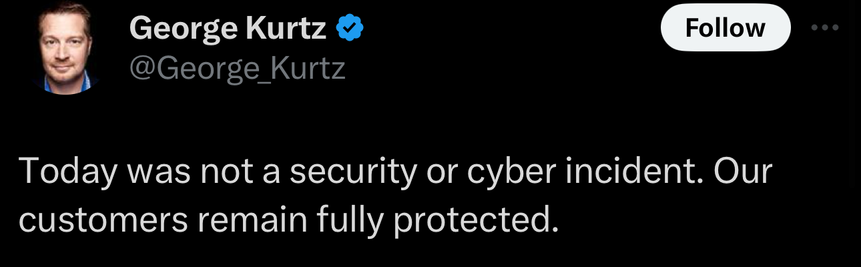

Crowdstrike statement: https://www.bbc.co.uk/news/live/cnk4jdwp49et?post=asset%3A0c379e1f-48df-493c-a11a-f6b1e3d1eb63#post

Basically 'it's not a security incident... we just bricked a million systems'

For anybody wondering why Microsoft keep ending up in the frame: they had an Azure outage, and (this may be news to some people) a lot of Microsoft support staff actually work for external vendors, e.g. TCS, Mindtree, Accenture etc.

Some of those vendors use Crowdstrike, and so those support staff have no systems.

But MS isn’t the cause of today's outage.

By far my fave thing about the CrowdStrike situation is Microsoft saying to try turning impacted PCs off and on again in a loop until you get the magic reboot where CrowdStrike updates before it blue screens.

lol, Microsoft have put ‘reboot each box 15 times’ on their website.
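Why a number like 15? If you assume each boot independently has some probability p of CrowdStrike pulling the update before the blue screen, the chance of at least one "magic" reboot in n tries is 1 - (1 - p)^n. A minimal sketch; p here is a made-up input, not a measured figure, and real boots aren't truly independent.

```python
def chance_of_recovery(p: float, reboots: int) -> float:
    """P(at least one 'magic' reboot) = 1 - (1 - p) ** reboots.

    Assumes each boot independently wins the update-vs-crash race with
    probability p. p is a hypothetical input, not a measured figure.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    if reboots < 0:
        raise ValueError("reboots must be non-negative")
    return 1.0 - (1.0 - p) ** reboots


if __name__ == "__main__":
    # Illustrative values of p only; real odds depend on network timing.
    for p in (0.05, 0.1, 0.2):
        print(f"p={p:.2f}: 15 reboots -> {chance_of_recovery(p, 15):.0%}")
```

With p = 0.1, 15 reboots gets you to roughly 79%; with p = 0.2, roughly 96%. Per box. Across a whole estate, that's a lot of reboots.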

The chuckle brothers at NoName are attempting to claim they caused the incident. To be super clear, NoName can barely DDoS a bike shed website, and once asked me to make their logo in Minecraft.

Probably the funniest BBC News update so far (they’ve cleared the airwaves for this incident).

BBC News at 6 is leading the entire show with this. (They asked me to appear, but I was slightly busy.)

For the record I spent much of the day trying to tell people it isn’t a Microsoft issue.

When I get successfully DDoS’d it’s both a security incident and I’m not protected…

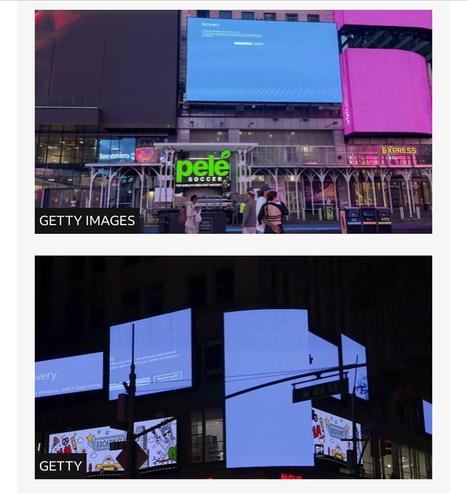

Billboards in Times Square are blue screen of deathing. Nice way to find out which orgs use CrowdStrike, this.

Source is BBC News, if anybody is wondering.

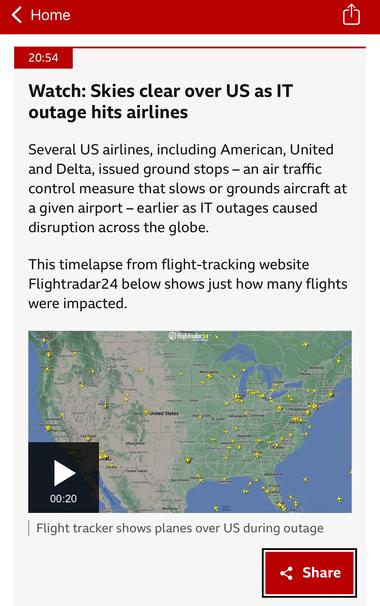

Crazy video of flights being ground-stopped across the US earlier today due to the CrowdStrike issue: https://www.bbc.co.uk/news/live/cnk4jdwp49et?post=asset%3Ae7676a84-628c-4830-ba22-3b86a0d7de4c#post

CrowdStrike have effectively put out a mini root cause analysis.

It says pretty much what everybody knows: they pushed a channel update and it caused the driver to crash.

If they blame the person who did the update... they shouldn’t, as it sounds like an engine defect.

https://www.crowdstrike.com/blog/technical-details-on-todays-outage/

For the people thinking ‘shouldn’t testing catch this?’, the answer is yes. Clearly something went wrong.

This isn’t CrowdStrike’s first rodeo on this, although it is the most severe incident so far.

E.g. just last month they had an issue where a content update pushed CPU usage to 100% on one core: https://www.thestack.technology/crowdstrike-bug-maxes-out-100-of-cpu-requires-windows-reboots/

Truthfully, these issues happen across all vendors: I’ve had my orgs totalled twice now by AV vendors, once while I was on holiday abroad and had to suspend said holiday.

@GossiTheDog Someone on HN also reported a similar boot loop on Linux earlier.